Designing Participation Assessments

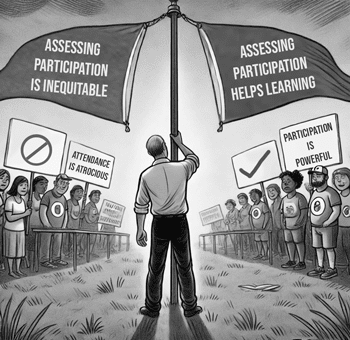

As with homework, the question of whether to give students "credit" for their participation in course activities is hotly contested. One camp says that assessing students for participation is inequitable because diverse populations of students can't be expected to be present in class every single day. The other camp says that assessing participation (peer instruction and the like) is beneficial because it actually improves student performance overall.

Pragmatically, we pitch our tent smack dab in the middle, halfway between the two camps. We agree that expecting 100% attendance is inequitable AND we agree that participation in course activities helps students learn. Where you pitch your tent is up to you!

If you choose to assess participation, though, how can you make the set of opportunities equitable? Make a lot of them!

Lots & Lots of Opportunities

Let's do some visualization. Imagine a bucket. We imagine an aluminum bucket, so that when we drop something inside, we get an echoing ping of satisfaction. Now, in this bucket, drop all of the activities you expect your students to do. Participating in peer instruction polling? Ping, ping, ping! Writing discussion posts? Ping, ping! Required reading? Ping! Add as many opportunities as possible into the bucket.

Now imagine your students reaching into the bucket and grabbing as many opportunities as they can hold in their hands. Did they grab everything in the bucket? Probably not. That's unrealistic. But did two giant handfuls of seized opportunity meet your expectations for participation in your course? Probably!

The more opportunities of different shapes and sizes you add to your bucket, the more of them your students will be able to fit in their hands. Consider:

- in-class polling opportunities,

- reading reflections,

- hands-on lab activities,

- visiting office hours,

- sharing notes taken in class,

- asking/answering questions on the course forum, etc.

Imagine a student who does almost all of the required readings but misses the peer instruction activities for five days because they have mono and can't come to class. They emailed you some questions about your slides and asked if you could connect them to another student who might be willing to share their notes. Did this student participate enough in course activities to support their learning? Probably.

Now imagine a student who attended class every day but missed a few of the discussion posts because they have track meets on the weekends and the WiFi on the bus is totally unreliable. Did this student participate enough to support their learning? Probably.

Participation & Grade Calculations

That's the question to keep in mind. Did the student participate enough to support their learning? You'll need to determine how much participation is necessary for students to master the course objectives. Maybe to earn an A+, students need to complete 85% of the participation opportunities. To earn an A, students need to complete 80%. Choose thresholds for each grade distinction that make sense for you. Be generous with your thresholds. Remember that your bucket of opportunities is big and students only need to seize enough to support their mastery.
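To make the threshold idea concrete, here is a minimal Python sketch of how a completion rate could map to a participation distinction. The 85% and 80% cutoffs come from the example above; the B and C cutoffs, the fallback label, and the function name `participation_distinction` are our own illustrative assumptions, not a recommended policy.

```python
# Thresholds as whole percentages, listed from highest to lowest.
# A+ and A match the example in the text; B and C are assumed values
# added only so the sketch has more than two levels.
PARTICIPATION_THRESHOLDS = [
    ("A+", 85),
    ("A", 80),
    ("B", 70),  # assumption
    ("C", 60),  # assumption
]


def participation_distinction(completed, offered,
                              thresholds=PARTICIPATION_THRESHOLDS,
                              fallback="Below C"):
    """Return the highest distinction whose threshold the student meets."""
    if offered <= 0:
        raise ValueError("offered must be a positive number of opportunities")
    if not 0 <= completed <= offered:
        raise ValueError("completed must be between 0 and offered")
    for distinction, min_percent in thresholds:
        # Integer comparison avoids floating-point rounding at the boundary.
        if completed * 100 >= min_percent * offered:
            return distinction
    return fallback


# A student who seized 32 of 40 opportunities sits exactly at 80%.
print(participation_distinction(32, 40))
```

Swapping in your own threshold list is the whole point: the bucket stays big, and the cutoffs stay generous.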

How will you know if your grade distinction thresholds are calibrated appropriately? You'll know they're appropriate if they rarely matter. Recall that students must meet all requirements of a grade distinction in order to earn that grade distinction. This means that a student's grade distinction is determined by their weakest area of mastery. In our experience, participation is almost never the weakest area of mastery. Low evidence of participation is usually correlated with low mastery of the course objectives, which should be clear elsewhere. Students can't learn if they don't participate in their learning. It's as simple as that.
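The "weakest area" rule can be sketched in a few lines of Python. The area names, the ordering of distinctions in `GRADE_ORDER`, and the function name `overall_distinction` are hypothetical; only the rule itself (a student must meet every requirement of a distinction to earn it) comes from the text.

```python
# Distinctions ordered from lowest to highest. This ordering is an assumption;
# substitute whatever set of grade distinctions your course uses.
GRADE_ORDER = ["F", "D", "C", "B", "A", "A+"]


def overall_distinction(area_distinctions):
    """Return the lowest distinction earned across all assessed areas.

    Because a student must meet every requirement of a distinction to earn it,
    the overall result is capped by the weakest area.
    """
    if not area_distinctions:
        raise ValueError("at least one assessed area is required")
    return min(area_distinctions.values(), key=GRADE_ORDER.index)


# Participation is the strongest area here, so it does not affect the result.
student = {"participation": "A+", "projects": "B", "exams": "A"}
print(overall_distinction(student))  # B
```

If your participation thresholds are calibrated generously, the participation entry should rarely be the minimum.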

Participation Assessment Policies

If you're concerned about the overhead of assessing a bucketful of participation opportunities, consider how you can simplify the assessment process for yourself. You can use a simple mastery level scheme, like the two-level Effortful Engagement scheme that we defined earlier in this tutorial. You can use auto-graders or assess-all-students-at-once functions in your gradebook software. (Shameless Plug: Are you using TeachFront as your gradebook software? You should be using TeachFront as your gradebook software.) You can use peer assessment techniques or even self-reporting. You can choose not to entertain resubmissions.

Here's what we do. We choose to assess all participation opportunities with a binary mastery level scheme: students earn either "Complete" or "Incomplete." However, we randomly choose an assessment process for each opportunity. Students don't know which of our processes we're going to use, which keeps them on their toes a bit.

- Sometimes we look at every artifact, taking a quick glance to see whether the student effortfully engaged.

- Sometimes we don't look at the artifacts at all, simply giving a "Complete" rating if the student turned something (anything) in.

- Sometimes, we comb through artifacts in greater detail. We look for effortful engagement in every nook and cranny of the assignment. Only fully complete artifacts earn the "Complete" rating. The rest get feedback that their submission did not show adequate effort.
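For readers who track this in a script or spreadsheet, the three processes above can be sketched as follows. This is an illustration under assumptions: the process names, the uniform random choice, and the boolean inputs `shows_effort` and `fully_complete` (standing in for a human judgment) are ours, and the text does not say how the random choice is actually weighted.

```python
import random

# Hypothetical labels for the three processes described in the list above.
PROCESSES = ("quick_glance", "completion_only", "detailed_review")


def choose_process(rng=random):
    """Pick one assessment process for an opportunity (uniform choice assumed)."""
    return rng.choice(PROCESSES)


def rate(process, submitted, shows_effort=False, fully_complete=False):
    """Return "Complete" or "Incomplete" for one artifact under a given process."""
    if not submitted:
        return "Incomplete"
    if process == "completion_only":
        # Anything turned in earns the rating; the artifact is not inspected.
        return "Complete"
    if process == "quick_glance":
        return "Complete" if shows_effort else "Incomplete"
    if process == "detailed_review":
        return "Complete" if (shows_effort and fully_complete) else "Incomplete"
    raise ValueError(f"unknown process: {process}")


# One process is drawn per opportunity and applied to every student's artifact.
process = choose_process()
print(process, rate(process, submitted=True, shows_effort=True, fully_complete=False))
```

Passing a seeded `random.Random` instance as `rng` makes the draw repeatable if you ever need to show how a process was selected.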

Because we offer flexibility in the quantity of participation opportunities required for each grade distinction, we don't allow students to resubmit participation work.

The low-stakes but single-shot nature of our participation opportunities, coupled with the randomized accountability of our assessment practices, helps to limit student shenanigans. If, in one of our deeper comb-throughs, we find that a student is trying to deceive us, we handle the situation individually with the student, as we would with any academic integrity violation. "Gaming the system" is academic dishonesty and is not tolerated in our classrooms.

Looking Forward

In the next section, we'll talk about how to streamline your assessment processes. Let's go!